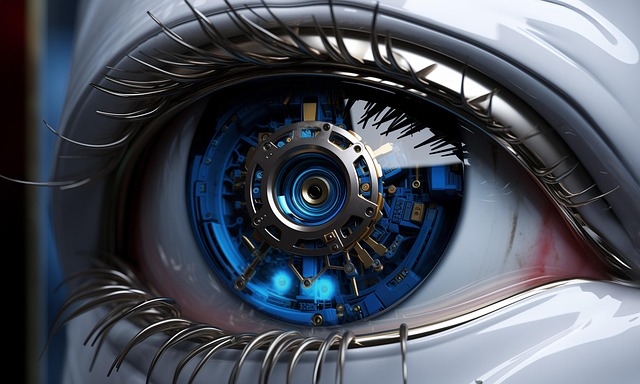

As AI assistants become part of daily routines, transparency is essential to earning user trust. Ethical guidelines that require transparency about how an assistant processes information, makes decisions, and uses data help keep interactions between people and technology fair and accountable. Developers should set clear standards for privacy, fairness, and consent so that AI assistants are built responsibly and used equitably. They should also keep refining those standards as user feedback and societal expectations change, so that assistants remain reliable and trustworthy tools.

AI assistants are now common in everyday life, and their growing reach raises pressing questions about transparency. Understanding why transparency matters is the first step toward building trust and addressing ethical concerns. This article examines why AI assistants need to be transparent, how to write ethical guidelines for developing them responsibly, and how transparency measures support compliance and continuous improvement.

- Understanding the Need for Transparency in AI Assistants

- Crafting Ethical Guidelines for Responsible AI Assistant Development

- Ensuring Compliance and Continuous Improvement Through Transparency Measures

Understanding the Need for Transparency in AI Assistants

As AI assistants take on a larger role in daily life, transparency has become essential. Because these systems interpret requests and act on users' behalf, the people who build and deploy them must be accountable for what the systems do. Transparency is not only a moral obligation but also the foundation of user trust and of healthy interactions between people and technology.

Without clear communication about how an AI assistant processes information, makes recommendations, and reaches conclusions, users may feel misled or unsure whether its help is reliable. Ethical guidelines that emphasize transparency push developers to build systems that can explain their reasoning, so users can understand an outcome and, if necessary, challenge it. This keeps AI assistants within a framework of accountability, supports fairness, and makes biases easier to detect and correct.

Crafting Ethical Guidelines for Responsible AI Assistant Development

Developing AI assistants raises its own set of ethical questions that deserve careful attention. Comprehensive ethical guidelines help ensure that these assistants are built responsibly and transparently. At a minimum, such guidelines should cover data privacy, algorithmic fairness, and user consent.

By establishing clear standards, developers can reduce potential harms, build trust with users, and promote fair and equitable use of AI assistants. Such guidelines act as a roadmap for responsible innovation, helping ensure that these systems improve people's experiences while upholding ethical principles.

Ensuring Compliance and Continuous Improvement Through Transparency Measures

Compliance with ethical guidelines is central to public trust in AI assistants. By adopting transparent practices, developers show their commitment to responsible AI development and deployment. These practices include disclosing the data sources and algorithms a system uses, along with its known limitations and potential biases. Such openness lets users understand how decisions are made, which strengthens accountability.

Continuous improvement is another benefit of prioritizing transparency. Regularly updating guidelines and practices in response to user feedback and emerging ethical concerns lets AI assistants keep pace with changing societal expectations. This iterative process leaves room for innovation without sacrificing integrity, helping AI assistants remain reliable and ethical tools for their users.